Artificial Intelligence is the new threat that the world is contemplating now; but this is only the beginning

Recent advances in Generative Artificial Intelligence (AI) have captured the imagination of the public, businesses and governments alike. The Government of India has also, very recently, released a comprehensive report on the opportunities afforded by this current wave of AI. Leaders of the IT industry in India are almost certain that this wave of AI will lead to fundamental changes in the skills landscape, and implicitly, in terms of underlying threats and dangers.

Scant understanding of the implications

Concurrently, there is an exponential explosion of digital uncertainty. Few are able to fully comprehend the nature of the new threat, the likes of which have not been witnessed in past decades, if not centuries. Few also realize the grave implications of what it means to have our lives and our economies run on what may be described as fragile digital topsoil. Even fewer realize the kind of intrinsic problems that result from this.

It is oft-repeated that digital infrastructure is built on layers upon layers of omniscient machine intelligence, human-coded software abstractions, and undependable hardware components. Each of these layers interconnects with the others through complex and deeply embedded protocols. The narrow aperture of understanding of such aspects means that the vast majority of people are ignorant of the implications. Even less understood is that complexity of this kind begets vulnerabilities.

While cyber has, no doubt, attracted a measure of attention, there is little, if any, true understanding of the nature of today’s cognitive warfare. Cognitive warfare truly ranks alongside other domains of modern warfare such as maritime, air and space. It puts a premium on sophisticated techniques aimed at destabilizing institutions, especially governments, and at manipulating, among other things, the news media, often at the hands of powerful non-state actors. It entails the art of using technological tools to alter the cognition of human targets, who are often unaware of such attempts. The end result could be a loss of trust, apart from breaches of confidentiality and a loss of governance capabilities. Even more dangerous is that it could alter a population’s behavior using sophisticated psychological techniques of manipulation.

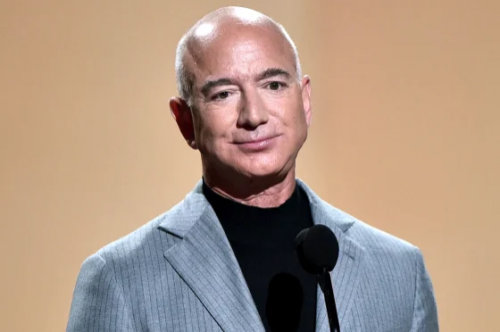

Given the maze of emerging technologies, both businesses and governments today confront an Armageddon of sorts. The methods employed are highly insidious. For example, with almost a third of companies in the more advanced countries of the world now investing more in intangible assets than in physical ones, they are putting themselves directly at risk from AI. Another estimate is that, with over 50% of the market value of the top 500 companies sitting in intangibles, they too are deeply vulnerable. As firms, large and small, spend billions of dollars to migrate to the Cloud, and more and more sensors constantly send out sensitive information, the risks go up in geometric progression. All this portends a dark, rather than a brave, new world order for us to inhabit.

Hence, digital uncertainty is rapidly morphing into radical uncertainty. Today, governments and government agencies are spending significant resources to undo the impact of misinformation and disinformation, but this may not be enough. There is not enough understanding of how the very nature of information is being manipulated and the extent to which AI drives many of these drastic transformations. All this contributes to what can only be referred to as ‘truth decay’.

The emergence of AGI

If AI is the grave threat that the world is currently contemplating, we are only witnessing the tip of the iceberg. As growing numbers of people become cognitively and psychologically dependent on digital networks, AI is able to influence many critical aspects of their thinking and functioning. What is simultaneously exhilarating and terrifying is the fact that many advances in AI are now being birthed by the machine itself. Sooner rather than later, we will witness the emergence of Artificial General Intelligence (AGI), that is, Artificial Intelligence that is equal or superior to human intelligence, which will penetrate whole new sectors and replace human judgment, intuition and creativity.

The impending dawn of AGI is far more disruptive and dangerous than anything else that we have encountered thus far. There is real fear that it could alter the very fabric of nation-states, and tear apart real and imagined communities across the globe. Social and economic inequalities will rise exponentially. Social anarchy will rule the streets, as we see happening in some of the cities closest to the epicenter of technological innovation. AGI has an inherent capacity to flood a country with fake content masquerading as truth, and to replace known voices with false ones that sound eerily familiar. This could lead to a breakdown of trust in what is said, read, or heard, and could overturn the trust pyramid with catastrophic consequences.

AGI will enable highly autonomous systems that outperform humans in many areas, including economically valuable work, education, social welfare and the like. AGI systems will have the potential to make decisions that are unpredictable and uncontrollable, which could have unintended and often harmful consequences. It is difficult to comprehend at this point its many manifestations, but job displacements and economic displacements would be the initial symptoms of what could become a tsunami engulfing almost all human-related activity. Digital data could in turn be converted into digital intelligence, enlarging the scope for disruption and the reining in of entire sectors. It would exacerbate both social and economic disparities.

Hence, AGI could prove to be as radical a game-changer in the world of the 21st century as the Industrial Revolution was in the 18th century. It is almost certain to lead to material shifts in the geo-political balance of power, in ways never comprehended previously. The specter of digital colonization looms large, with AGI-based power centers concentrated in a few specific locations. Consequently, AGI-driven disruption could precipitate the dawn of the age of digital colonialism. This would lead to a new form of exploitation, viz., data exploitation. In its most egregious form, it would entail the export of raw data and the import of value-added products that use this data. In short, AGI-based concentration of power would have eerie similarities to the old East India Company syndrome.

We could possibly be at the cusp of an ‘Oppenheimer Moment’, when the world is at a crossroads in the science of computing, communicating and engineering, and in the ethics of a new technology whose power and potential we do not fully comprehend. Reining in, or even halting, the development of the most advanced forms of AGI, or disallowing unfettered experimentation with the technology, may not be easy, but the alternative is a technology with the potential to shape the nature of the world in a manner well beyond what can be anticipated. Today, AGI seems to imitate forms of reasoning with a power to approximate the way humans think. This is an arms race of a new and different kind, and it has just begun. It, perhaps, calls for more intimate collaboration between states and the technology sector, which is easier said than done.

The Hamas-Israel conflict

A final word. AI can be exploited and manipulated skillfully in certain situations, as was possibly the case in the current Hamas-Israel conflict, sometimes referred to as the Yom Kippur War 2023. Israel’s massive intelligence failure is attributed by some experts to its over-reliance on AI, which was skillfully exploited by Hamas. AI depends essentially on data and algorithms, and Hamas appears to have used subterfuges to conceal its real intentions by distorting the information flowing into Israeli AI systems. Hamas, some experts claim, was thus able to blindside Israeli intelligence and the Israeli High Command. The lesson to be learnt is that an over-dependence on AI and a belief in its invincibility could prove to be as catastrophic as ‘locking the gates after the horse has bolted’.

M.K. Narayanan is a former Director, Intelligence Bureau, a former National Security Adviser, a former Governor of West Bengal, and a former Executive Chairman of CyQureX Pvt. Ltd., a U.K.-U.S.A. cyber security joint venture