In the spring of 2020, when the COVID-19 pandemic erupted, policymakers hailed contact-tracing apps as one of the most promising ways that digital technologies could help control its spread. Using apps and A.I.-powered platforms, governments could monitor people’s diagnoses and, later, their test results and locations, partly through self-reporting; alert infected people as well as others with whom they had come in contact; and develop disease-spread scenarios to support decision-making. Apple and Google even announced a historic joint effort to develop technology that health authorities could use to build the apps “with user privacy and security central to the design.”

However, contact-tracing apps have enjoyed, at best, mixed success worldwide, and have been a failure in the U.S. They haven’t helped much for one key reason: people don’t trust companies and governments to collect, store, and analyze personal data, especially data about their health and movements. Although the world’s digital giants developed the underlying technology responsibly, and it works as intended, the apps didn’t catch on because society wasn’t convinced that the benefits of using them outweighed the costs, even in a pandemic.

The concept of a social license, which was born when the mining industry and other resource extractors faced opposition to projects worldwide, differs from the other rules governing A.I.’s use. Academics such as Leeora Black and John Morrison, in the book The Social License: How to Keep Your Organization Legitimate, define the social license as “the negotiation of equitable impacts and benefits in relation to its stakeholders over the near and longer term. It can range from the informal, such as an implicit contract, to the formal, like a community benefit agreement.”

Fairness and transparency. If business is to be answerable for its use of A.I., it must be able to justify how its algorithms work and explain the resulting outcomes, demonstrating that those algorithms are fair and transparent. An A.I.-based recruitment system, for instance, should be able to show that all candidates who gave the same, or similar, answers to a question posed by the system received the same rating or score, even if they answered on different days.
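The consistency requirement in the recruitment example is concrete enough to audit. The following is a minimal Python sketch, not any vendor's method: it assumes a hypothetical log of scored responses (the `Response` record, `normalize`, and `inconsistent_groups` are illustrative names), groups equivalent answers to the same question, and flags any group whose scores differ. It treats answers that differ only in case or spacing as "the same"; auditing merely "similar" answers would require a real similarity measure, which is left as an assumption.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Response:
    """One candidate's scored answer to one screening question (hypothetical record)."""
    candidate_id: str
    question_id: str
    answer: str
    day: date
    score: float


def normalize(answer: str) -> str:
    # Assumption: answers differing only in case or whitespace count as "the same".
    # Checking "similar" answers would need a proper similarity measure instead.
    return " ".join(answer.lower().split())


def inconsistent_groups(responses, tolerance=0.0):
    """Return groups of equivalent answers whose scores differ by more than tolerance."""
    groups = defaultdict(list)
    for r in responses:
        groups[(r.question_id, normalize(r.answer))].append(r)

    flagged = {}
    for key, group in groups.items():
        scores = [r.score for r in group]
        if max(scores) - min(scores) > tolerance:
            flagged[key] = group
    return flagged


if __name__ == "__main__":
    # Illustrative data only: the same answer to q1 is scored differently a week apart.
    log = [
        Response("c1", "q1", "I led a team of five", date(2021, 3, 1), 4.0),
        Response("c2", "q1", "i led a  team of five", date(2021, 3, 8), 2.5),
        Response("c3", "q2", "Python and SQL", date(2021, 3, 2), 3.0),
        Response("c4", "q2", "python and sql", date(2021, 3, 9), 3.0),
    ]
    for (question, answer), group in inconsistent_groups(log).items():
        print(question, answer, [(r.candidate_id, r.day.isoformat(), r.score) for r in group])
```

With this sample log, only the q1 pair is flagged, because two candidates gave the same answer on different days and received different scores. The `tolerance` parameter is a design choice for systems that output continuous scores, where exact equality may be too strict.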

Benefit. Companies must ensure that stakeholders share their perception that the advantages of using A.I. outweigh the costs of doing so. They can measure the trade-offs at the individual, company, and societal levels, comparing the benefits of better health, convenience, and comfort in health care, for instance, with potential risks to security, privacy, and safety. Society may not always sanction A.I.’s use, and business should be ready for that. For instance, at the start of the pandemic, the idea of merging all the European Union members’ health care databases was mooted. Because it wasn’t obvious that the benefits would outweigh the costs, the idea was quietly killed.

Trust. Society must be convinced that companies that plan to use A.I. can be trusted to acquire data and will be accountable for their algorithms’ decisions. Trust is critical for social acceptance, especially when the A.I. can work without human supervision. That’s one reason society has been slow to endorse self-driving automobiles. People believe that the industry incumbents can build reliable mechanical vehicles, but they’re not yet convinced of the incumbents’ ability to develop digital self-driving technologies.

This article was originally published in Fortune.

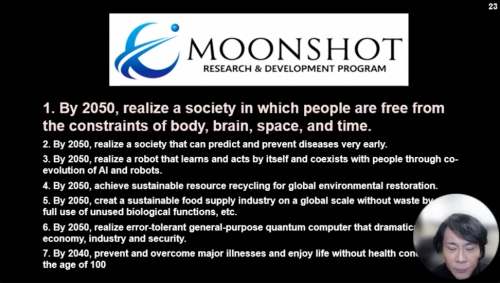

The Boston Global Forum (BGF), in collaboration with the United Nations Centennial Initiative, released a major work entitled Remaking the World – Toward an Age of Global Enlightenment. More than twenty distinguished leaders, scholars, analysts, and thinkers put forth unprecedented approaches to the challenges before us. They include President of the European Commission Ursula von der Leyen, Governor Michael Dukakis, Father of the Internet Vint Cerf, former Secretary of Defense Ash Carter, Harvard University Professors Joseph Nye and Thomas Patterson, MIT Professors Nazli Choucri and Alex ‘Sandy’ Pentland, and Vice President of the European Parliament Eva Kaili. The BGF introduced core concepts shaping pathbreaking international initiatives, notably the Social Contract for the AI Age, an AI International Accord, the Global Alliance for Digital Governance, the AI World Society (AIWS) Ecosystem, and AIWS City.