MIT Technology Review reports on an ultra-fast image sensor with a built-in neural network, developed at TU Wien (Vienna). The sensor can be trained to recognize certain objects and has now been presented in ‘Nature’.

The news: A new type of artificial eye, made by combining light-sensing electronics with a neural network on a single tiny chip, can make sense of what it’s seeing in just a few nanoseconds, far faster than existing image sensors.

Why it matters: Computer vision is integral to many applications of AI—from driverless cars to industrial robots to smart sensors that act as our eyes in remote locations—and machines have become very good at responding to what they see. But most image recognition needs a lot of computing power to work. Part of the problem is a bottleneck at the heart of traditional sensors, which capture a huge amount of visual data, regardless of whether or not it is useful for classifying an image. Crunching all that data slows things down.

A sensor that captures and processes an image at the same time, without converting or passing around data, makes image recognition much faster using much less power. The design, published in Nature today by researchers at the Institute of Photonics in Vienna, Austria, mimics the way animals’ eyes pre-process visual information before passing it on to the brain.

How it works: The team built the chip out of a sheet of tungsten diselenide just a few atoms thick, etched with light-sensing diodes. They then wired up the diodes to form a neural network. The material used to make the chip gives it unique electrical properties so that the photosensitivity of the diodes—the nodes in the network—can be tweaked externally. This meant that the network could be trained to classify visual information by adjusting the sensitivity of the diodes until it gave the correct responses. In this way, the smart chip was trained to recognize stylized, pixelated versions of the letters n, v, and z.
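The training idea described above can be illustrated in software. The following is only a minimal sketch, not the researchers' method: it assumes the 27 detectors are arranged as 9 pixels feeding 3 outputs, treats each detector's photosensitivity as a trainable weight, and sums the weighted pixel signals into one "current" per output. The letter patterns, noise level, learning rate, and all function names are hypothetical choices made for illustration.

```python
import math
import random

# Hypothetical 3x3 patterns (row-major) standing in for the stylized letters.
# These are illustrative assumptions, not the patterns used by the researchers.
LETTERS = {
    "n": [1, 1, 1,
          1, 0, 1,
          1, 0, 1],
    "v": [1, 0, 1,
          1, 0, 1,
          0, 1, 0],
    "z": [1, 1, 0,
          0, 1, 0,
          0, 1, 1],
}
CLASSES = list(LETTERS)
N_PIXELS = 9    # assumed: a 3x3 image
N_OUTPUTS = 3   # assumed: one output per letter, so 9 * 3 = 27 sensitivities


def softmax(values):
    """Turn raw output currents into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(weights, image):
    """Each output current is the sum of pixel intensity times sensitivity."""
    currents = [sum(w * p for w, p in zip(row, image)) for row in weights]
    return softmax(currents)


def noisy(image, rng, sigma=0.1):
    """Add Gaussian noise to a pattern to mimic imperfect optical input."""
    return [p + rng.gauss(0.0, sigma) for p in image]


def train(epochs=300, lr=0.5, seed=0):
    """Adjust the 27 sensitivities by gradient descent on cross-entropy loss."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(N_PIXELS)]
               for _ in range(N_OUTPUTS)]
    for _ in range(epochs):
        for label, name in enumerate(CLASSES):
            image = noisy(LETTERS[name], rng)
            probs = forward(weights, image)
            for k in range(N_OUTPUTS):
                # Gradient of softmax cross-entropy w.r.t. output current k.
                err = probs[k] - (1.0 if k == label else 0.0)
                for j in range(N_PIXELS):
                    weights[k][j] -= lr * err * image[j]
    return weights


def classify(weights, image):
    """Return the letter whose output has the highest probability."""
    probs = forward(weights, image)
    return CLASSES[probs.index(max(probs))]


if __name__ == "__main__":
    trained = train()
    for letter, pattern in LETTERS.items():
        print(letter, "->", classify(trained, pattern))
```

In the chip, the weighted sum happens physically as the image is captured, so no separate processing step is needed; the loop above only simulates that arithmetic.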

Limited vision: This new sensor is another exciting step on the path to moving more AI into hardware, making it quicker and more efficient. But there’s a long way to go. For a start, the eye consists of only 27 detectors and cannot deal with much more than blocky 3×3 images. Still, small as it is, the chip can perform several standard supervised and unsupervised machine-learning tasks, including classifying and encoding letters. The researchers argue that scaling the neural network up to much larger sizes would be straightforward.

The original article can be found here.

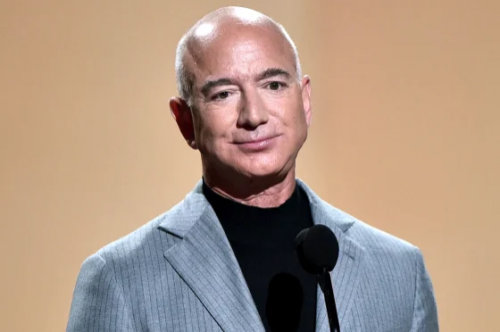

The AIWS Innovation Network connects distinguished professors and innovators from top universities to build the Social Contract 2020 and a monitoring system to supervise the use of AI by governments and large corporations. At the Policy Dialogue “Transatlantic Approaches on Digital Governance: A New Social Contract in Artificial Intelligence”, Professor Nazli Choucri of MIT will talk about the Social Contract 2020.