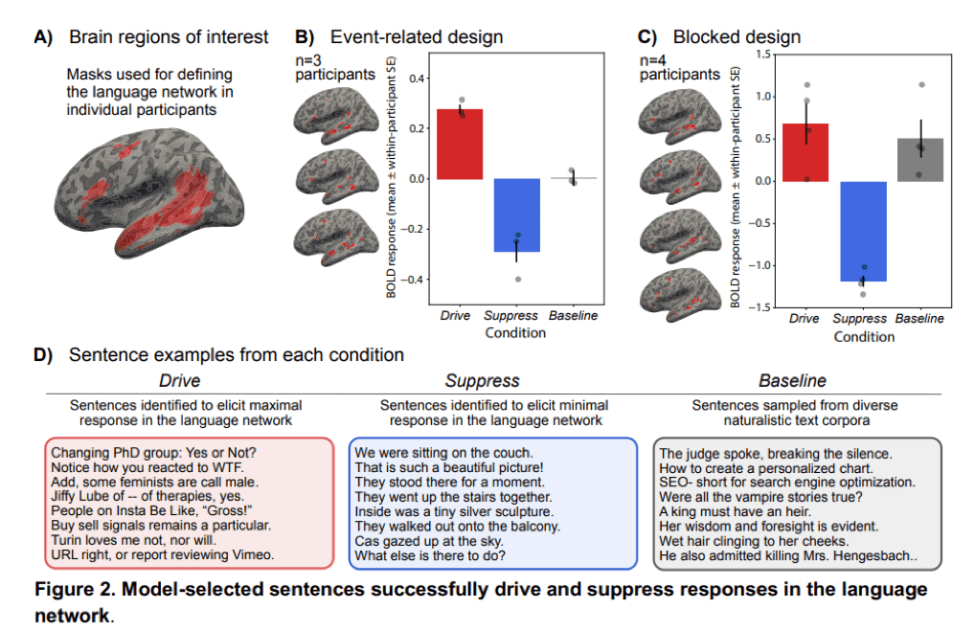

In the new paper *Driving and suppressing the human language network using large language models*, a research team from the Massachusetts Institute of Technology, the MIT-IBM Watson AI Lab, the University of Minnesota and Harvard University uses a GPT-based encoding model to identify sentences that are predicted to elicit specific responses in the human language network.

The study had two primary objectives:

- To rigorously evaluate a new class of models, large language models (LLMs), as models of human language processing.

- To gain an intuitive understanding of language processing by characterizing which stimulus properties drive or suppress responses in the language network, across a diverse range of linguistic inputs and their associated brain responses.

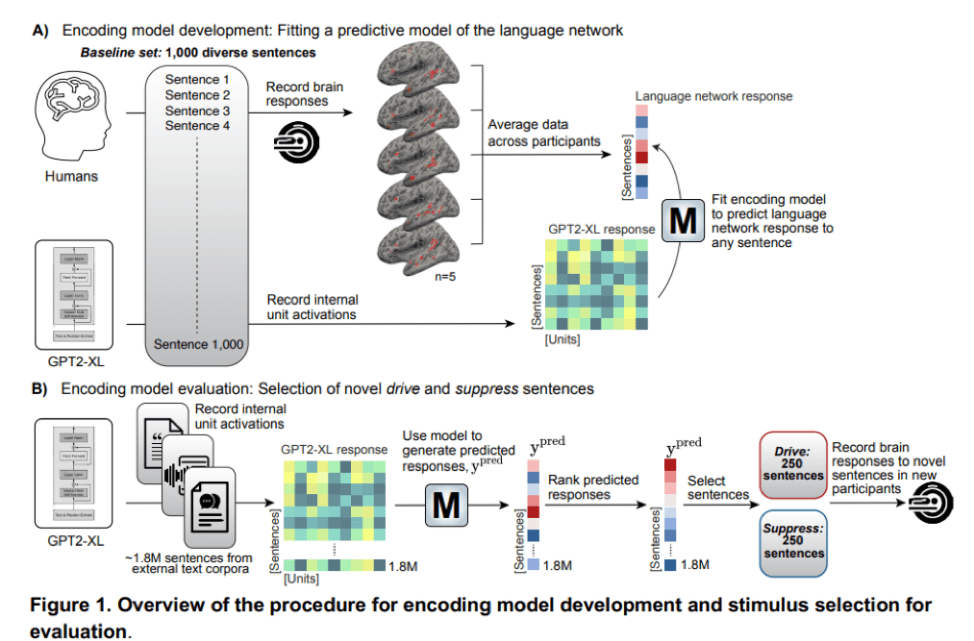

The team developed an encoding model that predicts the language network's response to an arbitrary sentence. The model takes last-token sentence embeddings from GPT2-XL as input and was trained on the brain responses of five participants to a baseline set of 1,000 diverse sentences extracted from text corpora. On held-out sentences from this baseline set, it achieved a prediction performance of r = 0.38 (the correlation between predicted and observed responses).
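The fit-then-evaluate procedure above can be sketched in a few lines of pure Python. This is a minimal illustration under loudly stated assumptions, not the authors' implementation: the "embeddings" are random stand-ins for GPT2-XL last-token embeddings (kept at a tiny dimension for speed), the "brain responses" are synthetic, and closed-form ridge regression is assumed as the embedding-to-response mapping. All names (`fake_embedding`, `fit_ridge`, `RIDGE_LAMBDA`, and so on) are hypothetical. The final lines show one simple, assumed way to use such a model to pick sentences predicted to drive or suppress responses: score every candidate and keep the extremes.

```python
import math
import random

# Hypothetical sizes. The real GPT2-XL hidden size is much larger; a small
# dimension keeps this pure-Python sketch fast.
DIM = 8
N_TRAIN, N_TEST = 160, 40
RIDGE_LAMBDA = 1.0  # assumed regularization strength


def fake_embedding(rng):
    """Stand-in for a last-token GPT2-XL sentence embedding (random here)."""
    return [rng.gauss(0.0, 1.0) for _ in range(DIM)]


def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back-substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def fit_ridge(xs, ys, lam):
    """Closed-form ridge regression: w = (X^T X + lam * I)^-1 X^T y."""
    xtx = [[sum(x[i] * x[j] for x in xs) + (lam if i == j else 0.0)
            for j in range(DIM)] for i in range(DIM)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(DIM)]
    return solve(xtx, xty)


def predict(w, x):
    """Predicted response: dot product of weights and embedding."""
    return sum(wi * xi for wi, xi in zip(w, x))


def pearson_r(a, b):
    """Pearson correlation between two equal-length lists."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


rng = random.Random(0)
true_w = [rng.gauss(0.0, 1.0) for _ in range(DIM)]
sentences = [fake_embedding(rng) for _ in range(N_TRAIN + N_TEST)]
# Synthetic per-sentence "brain responses" with arbitrary noise level.
responses = [predict(true_w, x) + rng.gauss(0.0, 3.0) for x in sentences]

train_x, test_x = sentences[:N_TRAIN], sentences[N_TRAIN:]
train_y, test_y = responses[:N_TRAIN], responses[N_TRAIN:]

# Fit on the training split, then score correlation on held-out sentences.
w = fit_ridge(train_x, train_y, RIDGE_LAMBDA)
r = pearson_r([predict(w, x) for x in test_x], test_y)
print(f"held-out r = {r:.2f}")

# Rank new candidate sentences by predicted response:
# lowest scores = predicted to suppress, highest = predicted to drive.
candidates = [fake_embedding(rng) for _ in range(100)]
scores = sorted((predict(w, x), i) for i, x in enumerate(candidates))
suppress_ids = [i for _, i in scores[:5]]
drive_ids = [i for _, i in scores[-5:]]
```

Ridge regression is a common choice for encoding models because it stays stable when embedding dimensions are many and correlated. In a real setting, the regularization strength would typically be chosen by cross-validation rather than fixed as it is here.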