For cutting-edge AI researchers looking for models with clean semantics to represent the context-specific causal dependencies that are essential for causal induction, DeepMind's work on causal reasoning algorithms encourages you to look at good old-fashioned probability trees.

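A probability tree diagram represents a probability space. Each node stands for a state of the process. Each branch is labeled with the conditional probability of moving to that child given the path taken so far. The probability of a complete root-to-leaf path is the product of the branch probabilities along it. Tree diagrams can therefore illustrate a series of independent events or a sequence of conditional probabilities.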
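As a hypothetical worked example (the numbers are invented for illustration and do not come from the DeepMind paper), suppose the root branches on whether it rains: $X=1$ with probability 0.3, $X=0$ with probability 0.7. Each of those nodes then branches on whether the grass is wet: $Y=1$ with probability 0.9 after rain and 0.2 otherwise. The probability of an event is the sum, over the paths on which it holds, of the products of branch probabilities. Conditional probabilities follow from the usual ratio:

$$
\begin{aligned}
P(Y{=}1) &= \underbrace{0.3 \times 0.9}_{X=1,\;Y=1} + \underbrace{0.7 \times 0.2}_{X=0,\;Y=1} = 0.27 + 0.14 = 0.41,\\[4pt]
P(X{=}1 \mid Y{=}1) &= \frac{P(X{=}1,\,Y{=}1)}{P(Y{=}1)} = \frac{0.27}{0.41} \approx 0.659.
\end{aligned}
$$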

Humans learn to reason largely by inducing causal relationships from observations, and according to cognitive scientists, we do it quite well. Even from sparse, limited data, such as the co-occurrence frequencies of causes and effects or the interactions between physical objects, humans can quickly learn causal structures.

Causal induction is a classic problem in machine learning and statistics. Models such as causal Bayesian networks (CBNs) can describe the causal dependencies needed for causal induction. However, CBNs cannot represent context-specific independencies, meaning independencies that hold only in certain contexts, such as only for particular values of another variable. According to the DeepMind team, its algorithms for probability trees cover the whole causal hierarchy (association, intervention, and counterfactuals) and operate on arbitrary propositional and causal events, extending causal reasoning to “a very general class of discrete stochastic processes.”
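To make the difference between the first two rungs of the causal hierarchy concrete, here is a minimal Python sketch. It is not DeepMind's implementation. It uses a hypothetical two-variable tree (rain `X` is resolved first, then wet grass `Y`; the numbers are invented) in which conditioning on `Y=1` and intervening with `do(Y=1)` give different answers. The helper names (`Node`, `leaves`, `probability`, `do`) are illustrative. The sketch assumes that whenever a node's children set a variable, each child sets it to a distinct value, so an intervention simply moves all probability onto the branch carrying the forced value. The paper's algorithms handle far more general events.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterator, List, Optional, Tuple

State = Dict[str, int]


@dataclass
class Node:
    # Variables assigned when the process enters this node, e.g. {"X": 1}.
    assign: State = field(default_factory=dict)
    # (branch probability, child) pairs; an empty list marks a leaf.
    children: List[Tuple[float, "Node"]] = field(default_factory=list)


def leaves(node: Node, prob: float = 1.0,
           state: Optional[State] = None) -> Iterator[Tuple[float, State]]:
    """Yield (path probability, full assignment) for every root-to-leaf path."""
    state = {**(state or {}), **node.assign}
    if not node.children:
        yield prob, state
        return
    for p, child in node.children:
        yield from leaves(child, prob * p, state)


def probability(root: Node, event: Callable[[State], bool]) -> float:
    """P(event): sum of the probabilities of the paths on which `event` holds."""
    return sum(p for p, s in leaves(root) if event(s))


def do(node: Node, var: str, value: int) -> Node:
    """Copy of the tree under do(var=value): every branch that sets `var`
    gets probability 1 if it sets it to `value`, and 0 otherwise.
    Assumes sibling branches set `var` to distinct values."""
    children = []
    for p, child in node.children:
        if var in child.assign:
            p = 1.0 if child.assign[var] == value else 0.0
        children.append((p, do(child, var, value)))
    return Node(dict(node.assign), children)


# Hypothetical tree: rain X is resolved first, then wet grass Y.
root = Node(children=[
    (0.3, Node({"X": 1}, [(0.9, Node({"Y": 1})), (0.1, Node({"Y": 0}))])),
    (0.7, Node({"X": 0}, [(0.2, Node({"Y": 1})), (0.8, Node({"Y": 0}))])),
])


def is_wet(s: State) -> bool:
    return s["Y"] == 1


def is_rain(s: State) -> bool:
    return s["X"] == 1


# Conditioning (association): observing wet grass makes rain more likely.
p_cond = probability(root, lambda s: is_rain(s) and is_wet(s)) / probability(root, is_wet)
# Intervention: wetting the grass ourselves tells us nothing about rain.
p_do = probability(do(root, "Y", 1), is_rain)

print(f"P(X=1 | Y=1)     = {p_cond:.3f}")  # prints 0.659
print(f"P(X=1 | do(Y=1)) = {p_do:.3f}")    # prints 0.300
```

Because `X` is resolved before `Y` in this tree, forcing `Y` leaves the upstream rain probability at its prior of 0.3. Observing `Y=1`, by contrast, raises the probability of rain to roughly 0.66.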

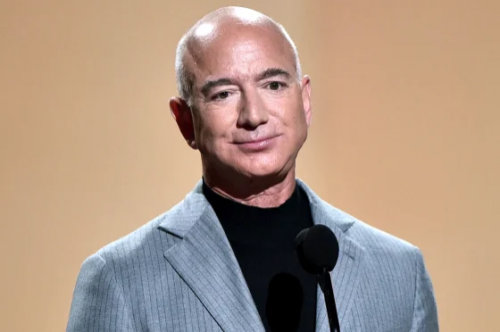

In the field of AI and causal reasoning, Professor Judea Pearl is a pioneer who developed a theory of causal and counterfactual inference based on structural models. He received the 2011 Turing Award, computer science’s highest honor. In 2020, the Michael Dukakis Institute for Leadership and Innovation (MDI) and the Boston Global Forum (BGF) also honored Professor Pearl as a World Leader in AI World Society (AIWS.net). Professor Pearl currently contributes to work on causal inference for AI transparency, one of the important AIWS.net topics in AI ethics.