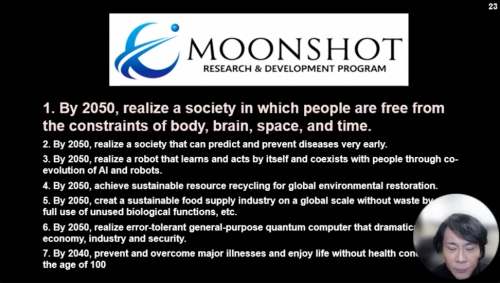

Many countries are now competing to use AI, or artificial intelligence, in the military sphere. But that may lead to a nightmare: a world where AI-powered weapons kill people based on their own judgment, without any human intervention.

What are fully autonomous lethal weapons?

Fully autonomous lethal weapons powered by AI are now the biggest issue, as rapid advances in technology are making them an actual possibility.

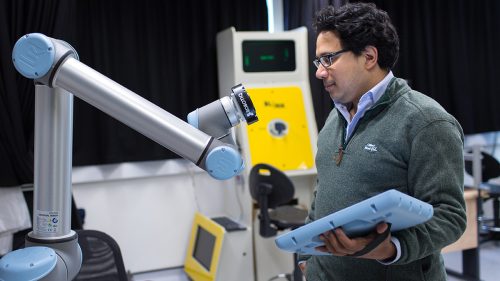

They differ from the armed UAVs (unmanned aerial vehicles) already deployed in actual warfare. UAVs are remotely controlled by humans, who make the final decisions about where and when to attack.

On the other hand, autonomous AI weapons would be able to make decisions without human intervention.

It is estimated that at least 10 countries are developing AI-equipped weapons. The United States, China, and Russia, in particular, are engaging in fierce competition. They believe AI will be key in determining which country holds an advantage over the others. Concerns are growing that the competition could lead to a new phase of the arms race.

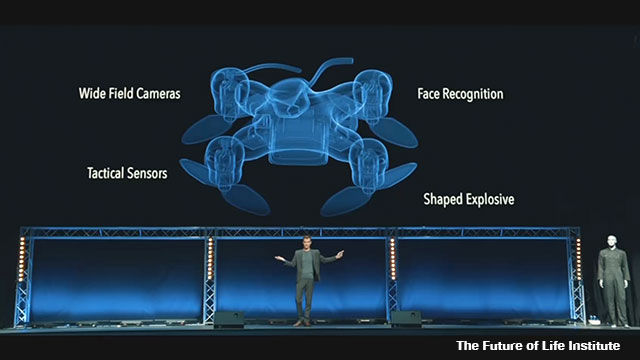

A non-governmental organization calling for a ban on such weapons produced a video to demonstrate how dangerous these AI weapons could be.

The video shows a palm-sized drone that uses an AI-based facial recognition system to identify human targets and kill them by penetrating their skulls.

A swarm of micro-drones is released from a vehicle and flies to a target school, killing young people one after another as they try to flee.

The NGO warns that AI-based weapons may be used as a tool in terrorist attacks, not just in armed conflicts between states.

The video is entirely fictional, but there are moves toward using swarms of such drones in actual military activities.

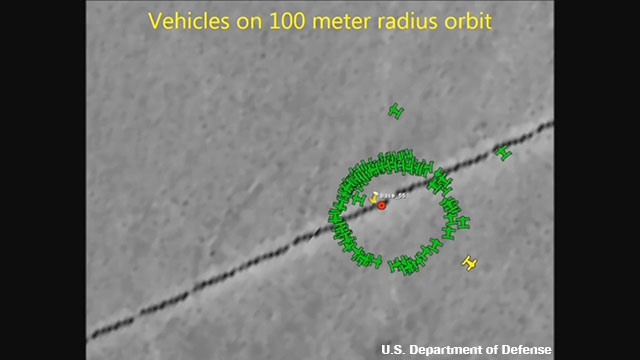

In 2016, the US Department of Defense tested a swarm of 103 AI-based micro-drones launched from fighter jets. Their flights were not programmed in advance. They flew in formation without colliding, using AI to assess the situation for collective decision-making.

Radar imagery shows a swarm of green dots, each one a drone, flying together and forming circles and other shapes.

An arms maker in Russia developed an AI weapon in the shape of a small military vehicle and released a promotional video. It shows the weapon finding a human-shaped target and shooting it. The company says the weapon is autonomous.

AI is also being considered for use in command and control systems. The idea is to have AI help identify the most effective ways to deploy troops or carry out attacks.

The United States and other countries developing AI arms technology say the use of fully autonomous weapons will avoid casualties among their service members. They also say it will reduce human errors, such as bombing the wrong targets.

Warnings from scientists

But many scientists disagree. They are calling for a ban on autonomous AI lethal weapons. Physicist Stephen Hawking, who died last year, was one of them.

Just before his death, he delivered a grave warning. What concerned him, he said, is that AI could start evolving on its own, and “in the future, AI could develop a will of its own, a will that is in conflict with ours.”

There are several issues concerning AI lethal weapons. One is an ethical problem. It goes without saying that humans killing humans is unforgivable. But the question here is whether robots should be allowed to make decisions about human lives.

Another concern is that AI could lower the hurdles to war for government leaders because it would reduce the costs of war and the loss of their own servicemen and women.

Proliferation of AI weapons to terrorists is also a grave issue. Compared with nuclear weapons, AI technology is far less costly and more easily available. If a dictator should get access to such weapons, they could be used in a massacre.

Finally, the biggest concern is that humans could lose control of them. AI devices are machines, and machines can break down or malfunction. They could also be subject to cyber-attacks.

As Hawking warned, AI could rise against humans. AI can quickly learn how to solve problems through deep learning based on massive data. Scientists say it could lead to decisions or actions that go beyond human comprehension or imagination.

In the board games of chess and Go, AI has beaten human world champions with unexpected tactics. But why it employed those tactics remains unknown.

In the military field, AI might choose to use cruel means that humans would avoid, if it decides that doing so would help achieve victory. That could lead to indiscriminate attacks on innocent civilians.

High hurdles for regulation

The global community is now working to create international rules to regulate autonomous lethal weapons.

Arms control experts are seeking to use the Convention on Certain Conventional Weapons, or CCW, as a framework for regulations. The treaty restricts or bans the use of landmines, among other weapons. Officials and experts from 120 CCW member countries have been discussing the issue in Geneva. They held their latest meeting in March.

They aim to impose regulations before specific weapons are created. Until now, arms ban treaties have been concluded only after weapons such as landmines and biological and chemical weapons were actually used and atrocities were committed. In the case of AI weapons, it would be too late to regulate them after fully autonomous lethal weapons come into existence.

The talks have been continuing for more than five years. But delegates have failed even to agree on how to define “autonomous lethal weapons.”

Some voice pessimism that regulating AI weapons with a treaty is no longer viable. They say that while talks stall, technology will advance quickly and the weapons will be completed.

Sources say discussions in Geneva are moving toward creating regulations less strict than a treaty. The idea is for each country to pledge to abide by international humanitarian law, then create its own rules and disclose them to the public. It is hoped that this will act as a brake.

In February, the US Department of Defense released its first Artificial Intelligence Strategy report. It says AI weapons will be kept under human control and will be used without violating international law or ethics.

But challenges remain. Some question whether countries will interpret international laws in their favor to make regulations that suit them. Others say it may be difficult to confirm that human control is functioning.

Humans have created various tools that are capable of indiscriminate massacres, such as nuclear weapons. Now, the birth of AI weapons that could be beyond human control is steering us toward an unknown and dangerous domain.

Whether humans will be able to recognize the possible crisis and put a stop to it before it turns into a catastrophe is critical. It appears that human wisdom and ethics are now being tested.