Machine learning-driven photography, combined with 3-D sensors in smartphones, will both correct the shortcomings of smartphone pictures and open up some stunning new aspects of photography.

It is possible to take a single image and infer what in the scene is occluded by another object. The result, called a “layered depth image,” makes it possible to create new scenes by removing an object from a photo, revealing background that the camera never saw but that was computed from the image. The approach uses the familiar encoder-decoder architecture found in many neural network applications to estimate the depth of a scene, and a “generative adversarial network,” or GAN, to construct the parts of the scene that were never actually in view when the picture was taken.

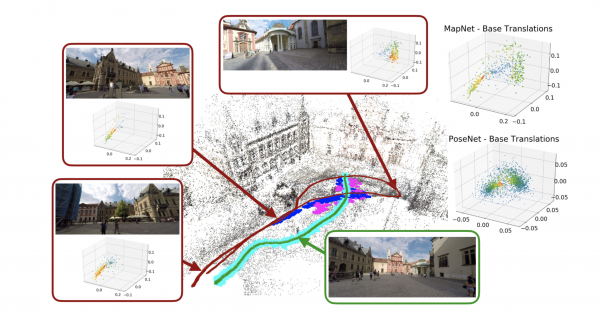
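
To make the idea of a layered depth image more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the method described above: the `Sample` class, the `label` field, and the `render` and `remove_object` helpers are all hypothetical names, and the hidden wall sample is written by hand where a real system would have a GAN synthesize it.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One surface hit along a pixel's viewing ray (hypothetical structure)."""
    color: str    # stand-in for an RGB value
    depth: float  # distance from the camera, arbitrary units
    label: str    # assumed object tag, e.g. from a segmentation step


def render(ldi):
    """Show, for each pixel, the color of the nearest remaining sample."""
    return [
        [min(pixel, key=lambda s: s.depth).color if pixel else None
         for pixel in row]
        for row in ldi
    ]


def remove_object(ldi, label):
    """Drop every sample tagged `label`, exposing the layers behind it."""
    return [
        [[s for s in pixel if s.label != label] for pixel in row]
        for row in ldi
    ]


# A 1x3 image: a person stands in front of a wall at the middle pixel.
# The wall sample behind the person was never seen by the camera; it is
# hard-coded here as a placeholder for generated content.
ldi = [[
    [Sample("gray", 5.0, "wall")],
    [Sample("red", 2.0, "person"), Sample("gray", 5.0, "wall")],
    [Sample("gray", 5.0, "wall")],
]]

before = render(ldi)
after = render(remove_object(ldi, "person"))
print(before)  # [['gray', 'red', 'gray']]
print(after)   # [['gray', 'gray', 'gray']]
```

The point of storing several samples per pixel is that removing the foreground object needs no new rendering pass: the background is already there, one layer deeper.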

Further down the road, another stage could matter a great deal for advancing machine learning techniques. It is possible to forgo the use of 3-D sensors and just use a convolutional neural network (CNN) to infer the coordinates in space of objects. That would save the expense of building the sensors into phones.
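
Once a depth value is available for each pixel, whether from a sensor or from a network's prediction, converting it to coordinates in space is simple geometry. The sketch below uses the standard pinhole camera model; the `backproject` helper, the intrinsics (`FX`, `FY`, `CX`, `CY`), and the tiny `predicted_depth` grid are illustrative assumptions standing in for a real camera calibration and a real CNN output.

```python
def backproject(u, v, depth, fx, fy, cx, cy):
    """Map pixel (u, v) with depth Z to camera-space (X, Y, Z), pinhole model."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


# Placeholder for a network's per-pixel depth prediction on a 2x2 image.
# These numbers are made up for illustration only.
predicted_depth = [
    [2.0, 2.0],
    [4.0, 4.0],
]

# Assumed camera intrinsics: focal lengths and principal point, in pixels.
FX = FY = 2.0
CX = CY = 0.5

points = [
    backproject(u, v, predicted_depth[v][u], FX, FY, CX, CY)
    for v in range(2)
    for u in range(2)
]
print(points[0])  # (-0.5, -0.5, 2.0)
print(points[3])  # (1.0, 1.0, 4.0)
```

The hard part, of course, is the depth estimate itself; this step only shows what "coordinates in space" means once that estimate exists.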

In the future, with enough 3-D shots to learn from, the CNN, or whatever algorithm is used, will be smart enough to look at the world and know exactly what it is like even without help from 3-D depth sensors. According to the AI World Society (AIWS), AI is a new technology wave that has a strong impact on many aspects of daily life, enhancing human values with more transparent and accurate information.