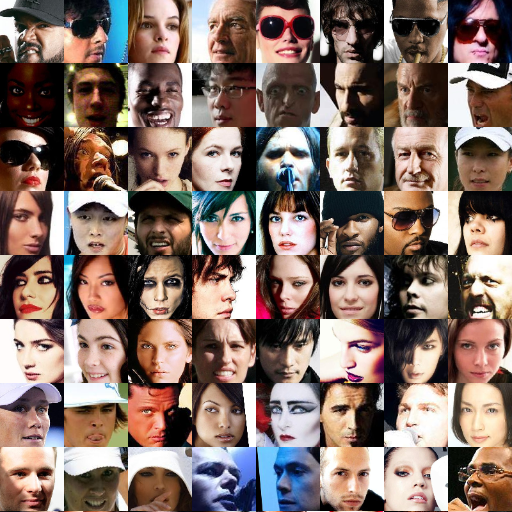

AI systems are increasingly known to be unfair because they can reproduce the same prejudices that are widespread in society. An algorithm developed by engineers from MIT CSAIL may help remove that bias from AI.

The algorithm learns both a specific task, such as face recognition, and the underlying structure of the training data, which allows it to identify and minimize any hidden biases. In testing, the algorithm reduced ‘categorical bias’ by over 60%, while performance remained stable.

Unlike other approaches that require human input for specific biases, the MIT team’s algorithm is able to examine a dataset, identify any biases in it and automatically resample the data to be more balanced, without needing a programmer in the loop.
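To make the idea of automatic rebalancing concrete, here is a minimal sketch of one way it could work. It is not the MIT team's implementation, and the article gives no details of theirs. It assumes each training example has already been reduced to a single numeric "latent" feature (a hypothetical stand-in for the learned structure of the data). The function name `resampling_weights` and the parameters `num_bins` and `alpha` are illustrative assumptions.

```python
import random
from collections import Counter


def resampling_weights(latents, num_bins=10, alpha=0.01):
    """Return sampling probabilities that favor rare latent values.

    Illustrative only: `latents` is a hypothetical list of one number per
    training example; `alpha` is a smoothing term chosen for this sketch.
    """
    lo, hi = min(latents), max(latents)
    # Fall back to a width of 1.0 when every value is identical.
    width = (hi - lo) / num_bins or 1.0
    # Assign each example to a histogram bin (clamp the maximum into the last bin).
    bins = [min(int((z - lo) / width), num_bins - 1) for z in latents]
    counts = Counter(bins)
    n = len(latents)
    # Weight each example inversely to the smoothed density of its bin.
    raw = [1.0 / (counts[b] / n + alpha) for b in bins]
    total = sum(raw)
    return [w / total for w in raw]


# Four similar examples and one under-represented example.
latents = [0.10, 0.12, 0.11, 0.13, 0.90]
probs = resampling_weights(latents, num_bins=2)

# Draw a rebalanced batch of example indices using those probabilities.
batch = random.choices(range(len(latents)), weights=probs, k=5)
```

In this toy setup the under-represented example receives a higher sampling probability than each of the four similar ones, so it appears more often in a resampled batch, and no one has to specify in advance which groups are under-represented.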

“Facial classification in particular is a technology that’s often seen as ‘solved,’ even as it’s become clear that the datasets being used often aren’t properly vetted,” said Alexander Amini, a co-author of the study.

“Rectifying these issues is especially important as we start to see these kinds of algorithms being used in security, law enforcement and other domains.”

According to Amini, the debiasing algorithm would be particularly relevant for very large datasets that are too big to be vetted by humans.

Giving AI the ability to reason and adapt would be a breakthrough for the industry, but it also means AI would have significant control over itself and its actions, which, without thorough consideration, might lead to unforeseen consequences. This problem calls for monitoring and regulation of AI. Currently, professors and researchers at MDI are working on an ethical framework for AI to help ensure that AI is deployed safely.