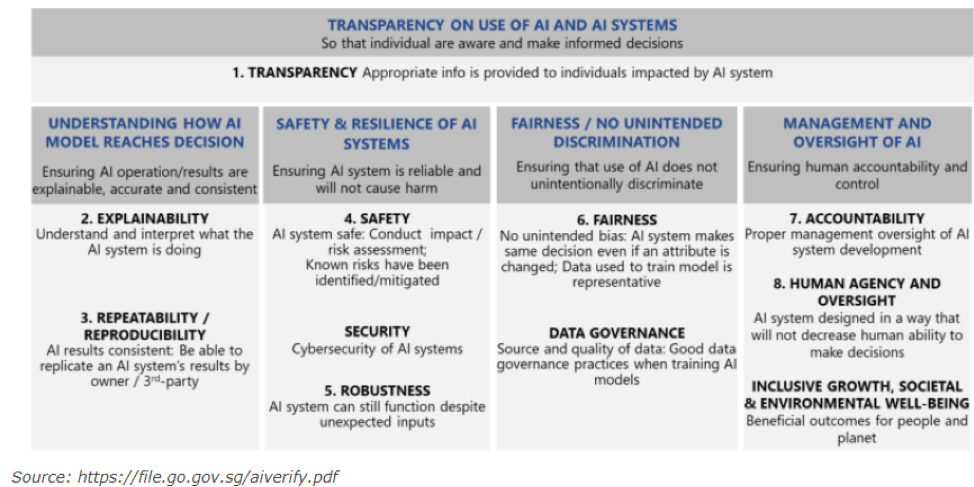

Singapore-based researchers have launched the first AI Governance Testing Framework and Toolkit for organizations that want to demonstrate responsible AI in a measurable way. The early-stage product, called AI Verify, aims to increase trust between businesses and their stakeholders by combining technical testing with process audits.

As more products and services use AI to personalize offerings or make autonomous predictions, the public needs ongoing assurance that these AI systems are fair, explainable, safe, and accountable. The objective is to increase public confidence in AI while encouraging its wider adoption. Voluntary AI governance frameworks and guidelines have been published to help system owners and developers build trustworthy AI products and services.

Understanding how AI models reach their decisions, and whether their predictions carry any unintended bias, is a vital part of transparency. AI systems should be held accountable and remain open to scrutiny.

The original article was posted here.

The Boston Global Forum (BGF), in collaboration with the United Nations Centennial Initiative, released a major work entitled Remaking the World – Toward an Age of Global Enlightenment. More than twenty distinguished leaders, scholars, analysts, and thinkers put forth unprecedented approaches to the challenges before us. These include President of the European Commission Ursula von der Leyen, Governor Michael Dukakis, "Father of the Internet" Vint Cerf, former U.S. Secretary of Defense Ash Carter, Harvard University Professors Joseph Nye and Thomas Patterson, MIT Professors Nazli Choucri and Alex 'Sandy' Pentland, and Vice President of the European Parliament Eva Kaili. The BGF introduced core concepts that shape pathbreaking international initiatives, notably the Social Contract for the AI Age, an AI International Accord, the Global Alliance for Digital Governance, the AI World Society (AIWS) Ecosystem, and AIWS City.