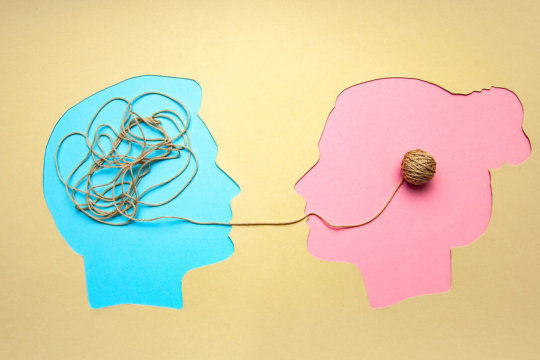

By monitoring a person’s brain activity, a new technology can reconstruct the words that the person hears with unprecedented clarity. The breakthrough harnesses the power of speech synthesis and artificial intelligence. It also lays the groundwork for helping people who cannot speak regain their ability to communicate with the outside world.

This research study is led by Prof. Nima Mesgarani, a principal investigator at Columbia University’s Mortimer B. Zuckerman Mind Brain Behavior Institute.

The research combines recent advances in deep learning (deep neural networks) with the latest innovations in speech synthesis to reconstruct intelligible speech from the human auditory cortex. The approach has shown promising results for the next generation of speech brain-computer interface (BCI) systems, which could not only restore communication for paralyzed patients but also transform human-computer interaction technologies.

Future breakthroughs that the technology could lead to include a wearable brain-computer interface that translates an individual’s thoughts, such as ‘I need a glass of water’, directly into synthesized speech or text. “This would be a game changer,” said Prof. Mesgarani. “It would give anyone who has lost their ability to speak, whether through injury or disease, the renewed chance to connect to the world around them.”

In a scientific first, Columbia neuroengineers have created a system that translates thought into intelligible, recognizable speech.

These findings were published in Scientific Reports. “Our voices help connect us to our friends, family and the world around us,” said Nima Mesgarani, PhD, the paper’s senior author and a principal investigator at Columbia University’s Mortimer B. Zuckerman Mind Brain Behavior Institute. “With today’s study, we have a potential way to restore that power. We’ve shown that, with the right technology, these people’s thoughts could be decoded and understood by any listener.”

Decades of research have shown that when people speak, or even imagine speaking, telltale patterns of activity appear in their brains. Experts are trying to record and decode these patterns so that they can be translated into words.

But accomplishing this feat has proven challenging. Early efforts to decode brain signals failed to produce anything resembling intelligible speech. Dr. Mesgarani’s team turned instead to a vocoder, a computer algorithm that can synthesize speech after being trained on recordings of people talking.

“This is the same technology used by Amazon Echo and Apple Siri to give verbal responses to our questions,” said Dr. Mesgarani, who is also an associate professor of electrical engineering at Columbia’s Fu Foundation School of Engineering and Applied Science.

To teach the vocoder to interpret brain activity, Dr. Mesgarani teamed up with Ashesh Dinesh Mehta, MD, PhD, a neurosurgeon at Northwell Health Physician Partners Neuroscience Institute.

“Working with Dr. Mehta, we asked epilepsy patients already undergoing brain surgery to listen to sentences spoken by different people, while we measured patterns of brain activity,” Dr. Mesgarani said. “These neural patterns trained the vocoder.”

Next, the researchers asked those same patients to listen to speakers reciting digits between 0 and 9 while recording brain signals that could then be run through the vocoder. The end result was a robotic-sounding voice reciting a sequence of numbers. To test the accuracy of the recording, Dr. Mesgarani and his team asked individuals to listen to the recording and report what they heard.

“We found that people could understand and repeat the sounds about 75% of the time, which is well above and beyond any previous attempts,” Dr. Mesgarani said. “The sensitive vocoder and powerful neural networks represented the sounds the patients had originally listened to with surprising accuracy.”

Dr. Mesgarani and his team plan to test more complicated words and sentences next, and they want to run the same tests on brain signals emitted when a person speaks or imagines speaking.

“In this scenario, if the wearer thinks ‘I need a glass of water,’ our system could take the brain signals generated by that thought and turn them into synthesized, verbal speech,” Dr. Mesgarani said. “This would be a game changer. It would give anyone who has lost their ability to speak, whether through injury or disease, the renewed chance to connect to the world around them.”

According to Mr. Nguyen Anh Tuan, Director of The Michael Dukakis Institute for Leadership and Innovation and Co-Founder and Chief Executive Officer of The Boston Global Forum: “What we expected has finally come true.”

“This success will especially help make the AIWS ideas come true. People will be more honest, unable to say something different from what they are thinking, and unable to be false. The cultural values of AIWS, to build a faithful and honest society, now have the opportunity to be implemented, to make AIWS a reality,” said Mr. Nguyen Anh Tuan.