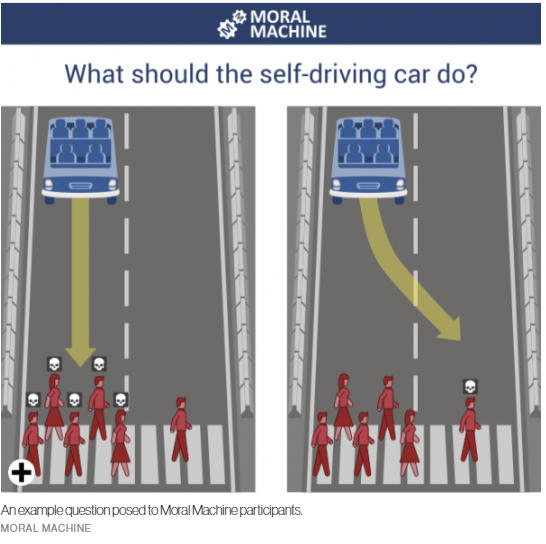

In 2014, the MIT Media Lab launched the Moral Machine to gather data on how people from different cultures set moral priorities.

After four years of research, millions of people in 233 countries and territories had made 40 million decisions, making it one of the largest studies ever done on global moral preferences. The experiment used a version of the “trolley problem” to test people’s opinions. It placed participants in a scenario in which a self-driving car on a highway encounters different people on the road and must choose whose lives to take in order to spare the others. The car was put into nine different kinds of dilemma, including whether a self-driving car should prioritize humans over pets, passengers over pedestrians, more lives over fewer, women over men, young over old, fit over sickly, higher social status over lower, and law-abiders over law-benders.

The data reveal that ethical preferences for AI vary with culture, economics, and geographic location. Collectivist cultures such as China and Japan are more likely to spare the old, while countries with more individualistic cultures are more likely to spare the young.

In terms of economics, participants from low-income countries are more tolerant of jaywalkers relative to pedestrians who cross legally. In countries with a high level of economic inequality, there is a wider gap between how participants treat individuals with high and low social status.

Unlike in the past, today we have machines that can learn through experience and, in a sense, have minds of their own. This calls for comprehensive standards to regulate and control unpredictable disruptions from artificial intelligence, an aim shared by research conducted at major independent organizations such as the MIT Media Lab, IEEE, and the Michael Dukakis Institute.