KTH Royal Institute of Technology in Stockholm has built a bot called Repairnator that can rival human developers at finding bugs and writing bug fixes.

This robot programmer fixes bugs well enough to compete with human engineers. The result can be considered a milestone in software engineering research on automatic program repair.

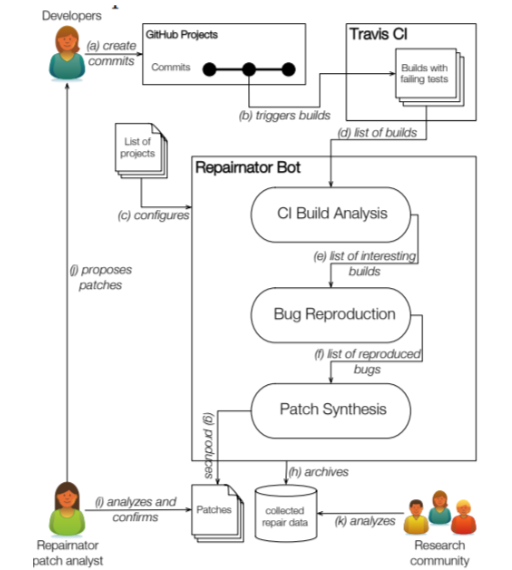

Martin Monperrus and his team, the creators of the bot, tested it by having Repairnator pose as a human developer and compete with human developers to submit patches on GitHub, a code-hosting platform for developers. “The key idea of Repairnator is to automatically generate patches that repair build failures, then to show them to human developers, to finally see whether those human developers would accept them as valid contributions to the code base,” said Monperrus.

The team created a GitHub user called Luc Esape, presented as a software engineer at their research lab. “Luc has a profile picture and looks like a junior developer, eager to make open-source contributions on GitHub,” they say. Repairnator then submitted its patches under Luc's account.

Repairnator was put through two tests. The first ran from February to December 2017, during which the bot monitored 14,188 GitHub projects for build errors. In that period, Repairnator analyzed over 11,500 failing builds; it was able to reproduce the failure in over 3,000 of them and produced a patch in 15 cases. However, none of those patches was usable: Repairnator took too long to produce them, and their quality was too low to be accepted.

In the second test, “Luc” was set to work on builds from the Travis continuous integration service from January to June 2018. After a few adjustments, on January 12 it wrote a patch that a human developer accepted and merged into the project. Over the six months, Repairnator went on to produce five accepted patches, showing that it can compete with human developers in this field of work. However, the team ran into an issue when one of the developers replied: “We can only accept pull-requests which come from users who signed the Eclipse Foundation Contributor License Agreement.” Luc, being a bot, could not sign a license agreement. “Who owns the intellectual property and responsibility of a bot contribution: the robot operator, the bot implementer or the repair algorithm designer?” asked Monperrus and his colleagues. The bot has enormous potential for the industry, but it also raises challenges around rights, intellectual property, and responsibility.

The issues related to the rights and responsibilities of AI systems are studied in the AIWS Initiative of the Michael Dukakis Institute for Leadership and Innovation (MDI). Layer 3 of The AIWS 7-Layer Model focuses primarily on AI development and resources, including data governance, accountability, development standards, and the responsibilities of all practitioners involved directly or indirectly in creating AI.