The US Defense Department has produced the first tools for catching deepfakes, developed under a program called Media Forensics. This may start an arms race between counterfeiters and government agents.

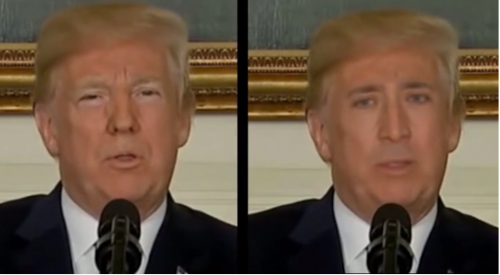

Techniques for faking facial gestures are now easier to implement than ever. These so-called deepfakes may appeal to many internet users, but they also carry serious consequences. Because deepfakes have been used to create fake pornographic videos of celebrities, to produce “revenge porn,” and even to misrepresent well-known politicians, they pose tremendous risks to individuals’ reputations.

To generate these fake videos, developers use a machine-learning technique known as generative modeling, which lets a computer learn from real images of an individual and then produce convincing fakes of that person.

To address this problem, the US Defense Department’s Defense Advanced Research Projects Agency (DARPA) has developed tools for catching deepfakes through its Media Forensics program. The tools build on work by a team led by Siwei Lyu, a professor at the State University of New York at Albany, which found that deepfakes rarely blink. Because the models are trained on still images, in which people’s eyes are usually open, the resulting fakes seldom blink, and the imitations look unnatural. “We are working on exploiting these types of physiological signals that, for now at least, are difficult for deepfakes to mimic,” said Hany Farid, a leading digital forensics expert at Dartmouth College.
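To make the blinking cue concrete, here is a minimal sketch of a blink-rate check. It uses the eye aspect ratio (EAR), a heuristic from the blink-detection literature, swapped in here for illustration; it is not the method used by DARPA or by Lyu’s team. It assumes six eye landmarks per frame have already been extracted by some face-landmark detector (not shown), and the `threshold` and `min_frames` values are illustrative assumptions rather than tuned settings.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for six eye landmarks p1..p6 ordered around the eye.

    p1 and p4 are the eye corners; (p2, p6) and (p3, p5) are upper/lower
    eyelid pairs. The ratio falls toward 0 as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def count_blinks(ear_series: Sequence[float],
                 threshold: float = 0.2,   # assumed cutoff for "eye closed"
                 min_frames: int = 2) -> int:  # assumed minimum blink length
    """Count runs of at least `min_frames` consecutive frames below `threshold`."""
    blinks = 0
    run = 0
    for ear in ear_series:
        if ear < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # the clip ends while the eye is still closed
        blinks += 1
    return blinks


def blinks_per_minute(ear_series: Sequence[float], fps: float, **kwargs) -> float:
    """Blink rate for a clip sampled at `fps` frames per second."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series, **kwargs) / minutes if minutes > 0 else 0.0


if __name__ == "__main__":
    open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
    print(round(eye_aspect_ratio(open_eye), 3))  # 0.667
    series = [0.3] * 10 + [0.1] * 3 + [0.3] * 10 + [0.1] + [0.3] * 6
    print(count_blinks(series))  # 1 (the one-frame dip is ignored)
```

A clip whose subject almost never blinks would produce a rate near zero over a long stretch of video, which is the kind of unnatural signal the researchers describe; deciding what rate counts as suspicious would require calibration on real footage.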

This work by the US Defense Department may start an AI-based arms race, pitting video forgers against digital sleuths. Another problem is that the machine-learning systems behind deepfakes could be trained to outsmart the forensics tools. As a result, questions of morality and responsibility become more pressing. That is why MDI has been focusing on building an ethical framework and AI standards for the AI World Society (AIWS).